
Data Lakes vs. Data Warehouses: Which Model Fits Your Use Case?

Six months ago, your startup's data lived in a single database. Today, you're drowning in customer data from six different platforms, and your operations team is asking for highly specific analytics. Manual data queries on production databases don’t scale, and with engineering teams stretched thin, you need a dedicated intelligence layer that unlocks insights without affecting the customer-facing systems.

Modern businesses generate data everywhere: user interactions, logs, and third-party integrations. You need data to create informative business analytics, and your data architecture determines what's analytically possible. Production databases optimized for transactions aren't designed for the complex queries that drive business intelligence.

Two popular approaches dominate the solution space: **data lakes**, which maximize flexibility, and **data warehouses**, which emphasize structure.

Data Lakes

Data lakes are repositories that store heterogeneous data in raw format: images, tabular data, videos, and audio files are all stored as they are.

Unlike conventional databases, a data lake defines the structure of its data at read time, an approach known as schema-on-read. New queries therefore take longer to run, but they are more flexible than queries against a data warehouse.
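As a minimal sketch of schema-on-read, the Python snippet below stores a few raw JSON events untouched and applies a different "schema" each time they are read. The event fields, the `RAW_EVENTS` sample, and the `read_with_schema` helper are all hypothetical and exist only for illustration; a real lake would hold files in object storage rather than a string.

```python
import json

# Hypothetical raw events as they might land in a lake: one JSON document per
# line, with fields that differ from record to record.
RAW_EVENTS = """\
{"user_id": 1, "event": "click", "ts": "2024-10-03T12:00:00"}
{"user_id": 2, "event": "purchase", "amount": "19.99"}
{"user_id": 3, "event": "click", "device": "ios"}
"""


def read_with_schema(raw_text, schema):
    """Apply a schema at read time: pick and cast only the requested fields."""
    rows = []
    for line in raw_text.splitlines():
        record = json.loads(line)
        # Fields missing from a record become None instead of failing the read.
        rows.append({
            name: cast(record[name]) if name in record else None
            for name, cast in schema.items()
        })
    return rows


# Two different views over the same untouched raw data.
clicks_view = read_with_schema(RAW_EVENTS, {"user_id": int, "event": str})
revenue_view = read_with_schema(RAW_EVENTS, {"user_id": int, "amount": float})
```

Nothing about the stored data changes between the two reads; only the structure requested by the reader does, which is where both the flexibility and the extra per-query work come from.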

These tradeoffs are ideal for experiment-driven use cases, such as machine learning or data science. Imagine tuning a user’s feed with a recommendation algorithm — a data lake empowers your data science team to experiment with new tweaks and transformations to maximize the performance of the algorithm. The pre-existing structure of a data warehouse would slow them down.

Storing Data in a Data Lake

Data is copied from production databases to the data lake on a regular schedule, or even streamed in real time. Because there is no data transformation bottleneck, data can be much fresher in a data lake than in a data warehouse.

Data storage is also 2-5x cheaper than in a data warehouse. This is because data is typically compressed and stored in cheap object storage (such as S3 or Azure Blob), with compute resources provisioned only when needed. A data lake also avoids the persistent indexes, staging tables, materialized views, and multiple data copies that warehouses require for their schema-on-write approach.

Querying Data in a Data Lake

To enable querying, data lakes utilize query engines that sit on top of storage. The engines serve as a translation layer between user-generated queries and the raw data stored in files in storage.

Specifically, the query engine is responsible for:

- Schema inference: Examining the query and file headers to generate structure on-the-fly

- Distributed processing: Breaking up the query across multiple compute nodes

- File scanning: Reading only the files or partitions the query needs

- In-memory processing: Loading data chunks into RAM for computation
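To illustrate the schema inference step from the list above, here is a small Python sketch that derives column names from a CSV header and guesses each column's type from its values. The `infer_type` and `infer_schema` helpers and the sample data are assumptions made for this example; production query engines use their own, more sophisticated inference rules.

```python
import csv
import io


def infer_type(values):
    """Return the narrowest type name (int, float, str) that fits every value."""
    for cast, name in ((int, "int"), (float, "float")):
        try:
            for value in values:
                cast(value)
            return name
        except ValueError:
            continue
    return "str"


def infer_schema(csv_text):
    """Infer column names from the header and column types from the data rows."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)
    columns = list(zip(*reader))  # transpose rows into columns
    return {name: infer_type(col) for name, col in zip(header, columns)}


# Hypothetical file contents; no schema was declared when this was written.
SAMPLE = "customer_id,amount,date\n1,19.99,2024-10-03\n2,5,2024-11-20\n"
schema = infer_schema(SAMPLE)
```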

Let’s make this concrete with an example. Imagine that a data engineer runs the following query to analyze each customer’s total spend in Q4 2024:

SELECT customer_id, SUM(amount)

FROM s3://my-lake/transactions/year=2024/

WHERE date >= '2024-10-01'

GROUP BY customer_id

To process this query, an engine would:

- Scan only the ‘2024’ partition folders

- Read files matching the date filter

- Aggregate the data across compute nodes

- Return results
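The steps above can be sketched in plain Python. This is a toy under stated assumptions, not how any particular engine works: a temporary directory stands in for object storage, the `year=...` folder layout and CSV format are illustrative choices, and `partial_sums` plays the role of the work one compute node would do on one file.

```python
import csv
import tempfile
from collections import defaultdict
from pathlib import Path


def write_partition(root, year, rows):
    """Write rows to a partition folder such as year=2024/ (hypothetical layout)."""
    folder = Path(root) / f"year={year}"
    folder.mkdir(parents=True, exist_ok=True)
    with open(folder / "part-0.csv", "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["customer_id", "amount", "date"])
        writer.writerows(rows)


def partial_sums(path, min_date):
    """Per-file work that a single compute node might do."""
    sums = defaultdict(float)
    with open(path, newline="") as f:
        for row in csv.DictReader(f):
            # ISO-formatted dates compare correctly as strings.
            if row["date"] >= min_date:
                sums[row["customer_id"]] += float(row["amount"])
    return sums


def query(root, year, min_date):
    """Prune to one partition, scan its files, then merge the partial results."""
    totals = defaultdict(float)
    files_scanned = 0
    for path in sorted((Path(root) / f"year={year}").glob("*.csv")):
        files_scanned += 1
        for customer, amount in partial_sums(path, min_date).items():
            totals[customer] += amount
    return dict(totals), files_scanned


with tempfile.TemporaryDirectory() as lake:
    write_partition(lake, 2023, [("a", "10.0", "2023-12-01")])
    write_partition(lake, 2024, [
        ("a", "5.0", "2024-09-30"),
        ("a", "7.5", "2024-10-15"),
        ("b", "2.5", "2024-11-02"),
    ])
    totals, files_scanned = query(lake, 2024, "2024-10-01")
```

The 2023 folder is never opened, which is the point of partitioning: the directory layout lets the engine skip data before reading a single byte of it.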

In practice, queries tend to be far more complex because data is not structured ahead of time, and tuning performance requires understanding distributed computing behavior, such as partitioning strategies, file formats, and cluster sizing. Unlocking the full potential of a data lake therefore typically requires data engineers or data scientists with distributed computing expertise.

Additionally, the distributed computing model means that data lakes offer more variable performance than a data warehouse, where indexes and predefined schemas maximize speed. However, because structure is generated on the fly, data lake queries are not constrained by a predefined schema, which enables rapid experimentation and flexibility.

Key Benefits of Data Lakes

In summary, the key benefits of a data lake are:

- Increased flexibility of data queries: No predefined schema to restrict queries

- Lower storage costs: Cheap object storage with pay-per-use compute

- Real-time data ingestion: Stream data directly without transformation bottlenecks

- Future-proofing: Preserve raw data for unknown future use cases and evolving analytics needs

Generally speaking, data lakes are ideal for use cases where future queries are not fully mapped out, like ML or data science. However, when you need fast, consistent reporting on well-understood business metrics, the structured approach of a data warehouse often provides better performance and easier maintenance.

Data Warehouses

Data warehouses are structured repositories designed for data that is already transformed and organized for specific business needs: aggregated customer records, fully processed transactions, and calculated metrics, stored in predefined table structures.

Structure is defined when writing data, known as schema-on-write. This means that data must fit into predefined schemas before storage, which enables fast, consistent queries once loaded.
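A minimal sketch of schema-on-write follows, using Python's built-in `sqlite3` module as a stand-in for a warehouse (SQLite is not a data warehouse; it is used here only because it ships with Python). The `sales` table, its constraints, and the `try_insert` helper are assumptions for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# The schema is declared before any data is stored.
conn.execute("""
    CREATE TABLE sales (
        region  TEXT NOT NULL,
        revenue REAL NOT NULL CHECK (revenue >= 0),
        date    TEXT NOT NULL
    )
""")


def try_insert(row):
    """Return True if the row fits the schema, False if the database rejects it."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute("INSERT INTO sales VALUES (?, ?, ?)", row)
        return True
    except sqlite3.IntegrityError:
        return False


accepted = try_insert(("EMEA", 120.0, "2024-01-15"))
rejected_null = try_insert((None, 50.0, "2024-01-16"))          # violates NOT NULL
rejected_negative = try_insert(("APAC", -5.0, "2024-01-17"))    # violates CHECK
row_count = conn.execute("SELECT COUNT(*) FROM sales").fetchone()[0]
```

Bad records are turned away at write time, so every later query can rely on the stored data already matching the declared structure.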

These tradeoffs are ideal for business intelligence and reporting use cases. Imagine generating monthly revenue reports at regular intervals: a data warehouse empowers your business analysts to quickly access reliable, structured data using familiar SQL skills. The flexible experimentation of a data lake would be overkill for these predictable reporting needs.

Storing Data in a Data Warehouse

A data warehouse schema is defined upfront according to business needs. Then, data is extracted from production databases and transformed into that schema through an ETL (Extract, Transform, Load) process. The loaded records are organized into historical snapshots so trends can be tracked over time.

The ETL process ensures data quality and consistency: cleaning duplicates, standardizing formats, and joining related information before storage. However, this upfront work creates a transformation bottleneck: at best, a warehouse offers hourly snapshots of critical data, and more commonly data arrives 12-24 hours after it is recorded in production systems.
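The three ETL stages can be sketched in a few lines of Python. Everything here is hypothetical: the `ORDERS` and `CUSTOMERS` samples stand in for extracted production data, the `fact_orders` table name is an illustrative choice, and an in-memory SQLite database stands in for the warehouse.

```python
import sqlite3
from datetime import datetime

# Extract: hypothetical rows pulled from two production systems.
ORDERS = [
    {"order_id": 1, "customer_id": 10, "amount": "19.99", "date": "10/03/2024"},
    {"order_id": 1, "customer_id": 10, "amount": "19.99", "date": "10/03/2024"},  # duplicate
    {"order_id": 2, "customer_id": 11, "amount": "5.00", "date": "2024-11-20"},
]
CUSTOMERS = {10: "EMEA", 11: "APAC"}


def standardize_date(text):
    """Normalize US-style (MM/DD/YYYY) or ISO dates to YYYY-MM-DD."""
    for fmt in ("%Y-%m-%d", "%m/%d/%Y"):
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {text!r}")


def transform(orders, customers):
    """Drop duplicate orders, standardize formats, and join in the region."""
    seen, rows = set(), []
    for order in orders:
        if order["order_id"] in seen:
            continue
        seen.add(order["order_id"])
        rows.append((
            order["order_id"],
            customers[order["customer_id"]],
            float(order["amount"]),
            standardize_date(order["date"]),
        ))
    return rows


def load(conn, rows):
    """Load transformed rows into a predefined warehouse table."""
    conn.execute(
        "CREATE TABLE fact_orders ("
        "order_id INTEGER PRIMARY KEY, region TEXT, amount REAL, date TEXT)"
    )
    conn.executemany("INSERT INTO fact_orders VALUES (?, ?, ?, ?)", rows)
    conn.commit()


warehouse = sqlite3.connect(":memory:")
load(warehouse, transform(ORDERS, CUSTOMERS))
loaded = warehouse.execute("SELECT * FROM fact_orders ORDER BY order_id").fetchall()
```

All of the cleanup happens before the load, which is why the stored data is immediately usable and also why it lags behind production.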

The overall result? Business-ready data that analysts can use immediately, though with a delay.

Querying Data in a Data Warehouse

Because data is already organized into a defined schema, simple SQL queries can usually return data in the shape a report needs. Business users can write straightforward queries like SELECT region, SUM(revenue) FROM sales WHERE date >= '2024-01-01' GROUP BY region without complex joins or data cleaning. Warehouses also support more complex analytical SQL, such as advanced window functions, statistical calculations, and sophisticated business logic, though such queries can strain against the predefined structure.
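As a runnable version of the kind of reporting query described above, the sketch below loads a few made-up rows into an in-memory SQLite table (again a stand-in for a warehouse, not a real one) and totals revenue per region. The table contents are assumptions for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, revenue REAL, date TEXT)")
conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", [
    ("EMEA", 100.0, "2023-12-31"),  # excluded by the date predicate
    ("EMEA", 120.0, "2024-01-15"),
    ("EMEA", 80.0, "2024-02-01"),
    ("APAC", 50.0, "2024-03-10"),
])

# One row per region, with no joins or cleanup needed at query time.
revenue_by_region = conn.execute("""
    SELECT region, SUM(revenue)
    FROM sales
    WHERE date >= '2024-01-01'
    GROUP BY region
    ORDER BY region
""").fetchall()
```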

Key Benefits of Data Warehouses

In summary, the key benefits of a data warehouse are:

- Fast, consistent structured queries with predictable performance

- Reduced need for specialized data engineering skills for querying data

- Business-ready data that analysts can use immediately without transformation

Data warehouses excel when your analytical needs are well-defined and performance is critical. Data lakes shine for exploratory analysis and diverse data types but sacrifice query performance and require specialized skills. However, each approach has inherent limitations that have led to the emergence of hybrid solutions attempting to capture the best of both worlds.

Hybrid Solutions

For years, organizations faced a binary choice between lakes and warehouses, each with fundamental tradeoffs.

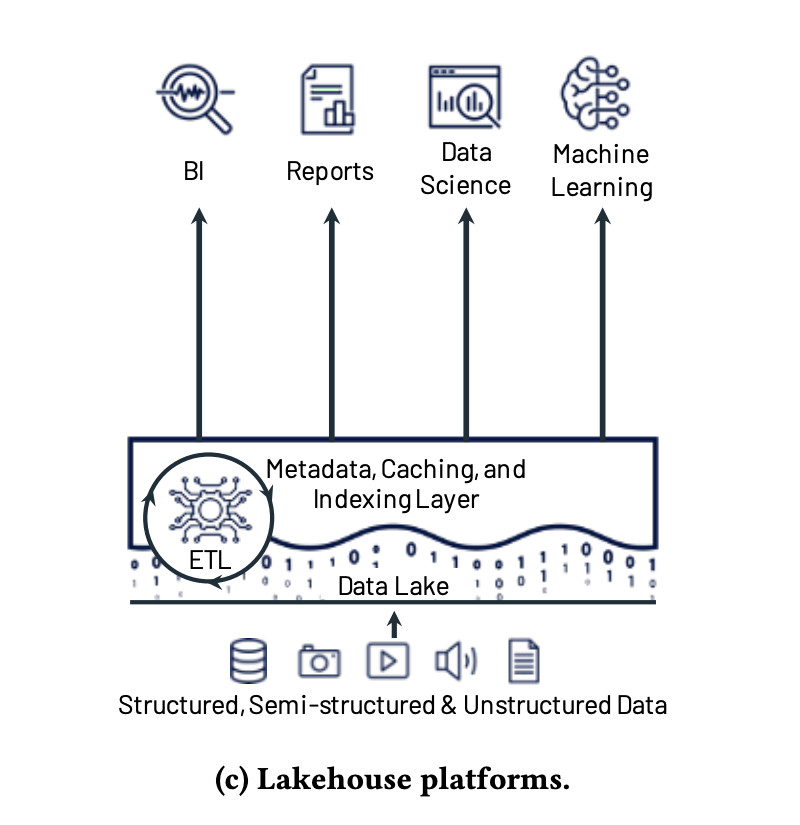

In recent years, data lakehouses emerged as an alternative that combines the flexibility of lakes with the performance characteristics of warehouses. Like data lakes, data lakehouses store diverse data formats in object storage at low cost. However, they add a metadata layer that provides warehouse-like features: ACID transactions, schema enforcement, and indexing, directly on top of raw files.

A diagram of lakehouse platforms (Armbrust et al.)
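To give a feel for what a lakehouse metadata layer does, here is a deliberately simplified, in-memory Python toy. It is not the design of any real lakehouse format: the `ToyLakehouseTable` class, its log structure, and its validation rules are all hypothetical. It shows only two ideas, schema enforcement before data becomes visible, and all-or-nothing commits recorded in a version log over immutable files.

```python
class ToyLakehouseTable:
    """In-memory stand-in for a metadata layer over immutable data files."""

    def __init__(self, schema):
        self.schema = schema  # column name -> required Python type
        self.files = {}       # file name -> rows (stands in for object storage)
        self.log = [[]]       # log[v] = file names visible at version v

    def commit(self, file_name, rows):
        """Validate every row, then publish the file with a single log append."""
        for row in rows:
            if set(row) != set(self.schema):
                raise ValueError(f"columns do not match schema: {sorted(row)}")
            for column, expected in self.schema.items():
                if not isinstance(row[column], expected):
                    raise ValueError(f"{column!r} must be {expected.__name__}")
        self.files[file_name] = list(rows)
        # Readers only see the new file once this version is appended.
        self.log.append(self.log[-1] + [file_name])
        return len(self.log) - 1

    def read(self, version=None):
        """Return all rows visible at a version (the latest by default)."""
        names = self.log[-1 if version is None else version]
        return [row for name in names for row in self.files[name]]


table = ToyLakehouseTable({"customer_id": int, "amount": float})
v1 = table.commit("part-0", [{"customer_id": 1, "amount": 9.5}])

try:
    table.commit("part-1", [{"customer_id": 2, "amount": "oops"}])
    bad_commit_rejected = False
except ValueError:
    bad_commit_rejected = True
```

Because a rejected commit never reaches the log, readers never observe partially written or malformed data, and older versions remain readable by passing an earlier version number to `read`.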

However, this approach still requires setting up new data infrastructure and incurs delays from copying data over on a regular basis. Archil solves that problem with a unique architecture that builds a metadata layer directly on top of production blob storage. With this approach, Archil offers many of the key benefits of data lakehouses with minimal data engineering investment.

If you’re looking for a solution that enables both experimentation and speed of structured queries, a hybrid solution may be less costly than standing up both a data lake and data warehouse.

Data Lakes vs Data Warehouses: Which one is right for me?

Let’s compare these two approaches to structuring data for analytics:

Final thoughts

Data lakes and data warehouses are two popular approaches for enabling data analysis on top of production data. Data lakes offer maximum flexibility, whereas data warehouses offer maximum performance and speed.

Finally, if you’re looking for a compromise between flexibility and performance, consider hybrid solutions like data lakehouses and Archil, which offer the best of both worlds and may prevent diversion of resources away from other critical business functions.
