
What are S3 Vectors, and Where Does Archil Fit In?


Generative AI has revolutionized how we think about data.

It's no longer just about storing information; it's about understanding the hidden relationships between concepts. In this new paradigm, the ability to connect, cluster, and find patterns isn't just a feature; it's the foundation of artificial intelligence.

This shift has driven growth for vector databases, with embeddings now powering RAG and advanced search applications. However, traditional storage systems weren't designed for vector workloads. S3 provides scale and durability, but on its own it lacks the similarity search that those workloads depend on.

As AI applications increasingly rely on vector-centric operations, specialized storage solutions become necessary. Amazon answered part of this challenge with the release of S3 Vectors, integrating vector capabilities directly into S3 and eliminating the need for intermediary indexing systems.

However, vector storage and retrieval are just one challenge.

While S3 Vectors provides indexing and searching capabilities at scale, S3 remains fundamentally an object store—optimized for durability and scale rather than low-latency access or real-time workflows. This is where Archil enters the picture, addressing the next layer of the problem: making vector data feel instantly accessible and ready for interaction.

What is Amazon S3 Vectors?

S3 Vectors represents Amazon’s leap into vector-native storage.

Announced in preview on July 15, 2025, this purpose-built storage option is designed to reduce the cost of storing and querying vectors (AWS cites savings of up to 90 percent), so businesses can keep AI-ready data at scale without compromising affordability.

At its core is the vector bucket: a new bucket type with its own dedicated set of APIs. Inside a vector bucket, vectors are organized into vector indexes, which store large vector datasets and serve similarity search queries against them. Because the service manages how vector data is organized within each index, there is no infrastructure to provision.

Each vector bucket supports up to 10,000 vector indexes, and each index can store tens of millions of embeddings. In other words, a single vector bucket can hold billions of embeddings, enough for very large datasets and high-volume AI workloads.
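To make the scale concrete, here is a rough back-of-the-envelope calculation. The 10,000-index limit comes from the text above; the per-index figure of 10 million is an assumption chosen as the low end of "tens of millions", and the 1,024-dimension float32 vectors are purely illustrative.

```python
# Back-of-the-envelope capacity of a single vector bucket.
MAX_INDEXES_PER_BUCKET = 10_000   # limit quoted in the article
VECTORS_PER_INDEX = 10_000_000    # assumption: low end of "tens of millions"


def bucket_capacity(indexes: int = MAX_INDEXES_PER_BUCKET,
                    vectors_per_index: int = VECTORS_PER_INDEX) -> int:
    """Upper bound on vectors in one bucket if every index is full."""
    return indexes * vectors_per_index


def raw_size_gib(num_vectors: int, dimension: int, bytes_per_value: int = 4) -> float:
    """Raw float32 payload in GiB, ignoring keys, metadata, and index overhead."""
    return num_vectors * dimension * bytes_per_value / 2**30


if __name__ == "__main__":
    print(f"{bucket_capacity():,} vectors per bucket")
    print(f"{raw_size_gib(VECTORS_PER_INDEX, 1024):.1f} GiB of raw data per full index")
```

Even with the conservative per-index assumption, one bucket tops out at 100 billion vectors, which is why the "billions of embeddings" figure is comfortably within reach.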

You can also attach metadata to each vector as key-value pairs, such as dates, categories, or user preferences. Queries can then filter on that metadata, for example to retrieve only the vectors that belong to a specific dataset or match a given user's preferences.
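The idea of combining a metadata filter with a similarity search can be sketched in a few lines of plain Python. This is an in-memory illustration only, not the S3 Vectors API: the `StoredVector` class, the `query` function, the exact-match filter semantics, and the sample data are all hypothetical.

```python
import math
from dataclasses import dataclass, field


@dataclass
class StoredVector:
    key: str
    data: list[float]
    metadata: dict[str, object] = field(default_factory=dict)


def cosine_distance(a: list[float], b: list[float]) -> float:
    """1 - cosine similarity; assumes both vectors are non-zero."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norms


def query(vectors, query_vector, top_k, metadata_filter=None):
    """Keep vectors whose metadata matches every filter pair, then rank by distance."""
    metadata_filter = metadata_filter or {}
    candidates = [
        v for v in vectors
        if all(v.metadata.get(k) == want for k, want in metadata_filter.items())
    ]
    ranked = sorted(candidates, key=lambda v: cosine_distance(v.data, query_vector))
    return [v.key for v in ranked[:top_k]]


# Hypothetical sample data.
SAMPLE_VECTORS = [
    StoredVector("doc-1", [1.0, 0.0], {"category": "finance", "year": 2024}),
    StoredVector("doc-2", [0.9, 0.1], {"category": "legal", "year": 2024}),
    StoredVector("doc-3", [0.0, 1.0], {"category": "finance", "year": 2023}),
]

if __name__ == "__main__":
    print(query(SAMPLE_VECTORS, [1.0, 0.0], top_k=2))
    print(query(SAMPLE_VECTORS, [1.0, 0.0], top_k=2,
                metadata_filter={"category": "finance"}))
```

Without a filter, the two nearest neighbors are `doc-1` and `doc-2`; restricting the query to the `finance` category swaps `doc-2` for `doc-3`, even though `doc-3` is farther away. A real service would use an approximate index rather than this brute-force scan.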

Additionally, a continuous background process keeps reorganizing the stored vector data to get the best price-performance. The cost advantage is most pronounced for large volumes of unstructured data whose vectors are queried infrequently.

These storage features pay off in practice through native integration with Amazon Bedrock Knowledge Bases and Amazon SageMaker Unified Studio, which lets you build RAG pipelines and semantic search on top of S3 Vectors with minimal architectural overhead.

Furthermore, integration with Amazon OpenSearch Service enables **data tiering**, so you can trade storage cost against query latency for each set of vectors.

This optimizes storage economics in two ways. Rarely queried vectors stay in S3's low-cost storage layer, while high-demand vectors can be moved into OpenSearch for lower-latency, higher-throughput search.
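As a purely illustrative sketch of the tiering idea, the toy policy below routes an index to a "hot" tier once it has been queried often enough and leaves everything else in the "cold" tier. The `TierRouter` class, the threshold, and the promotion rule are hypothetical; the actual S3 Vectors and OpenSearch integration is configured through AWS and does not necessarily work this way.

```python
from collections import Counter


class TierRouter:
    """Toy policy: promote an index to the hot tier after enough queries.

    "cold" stands in for the low-cost storage tier and "hot" for the
    low-latency search tier. Both labels are illustrative only.
    """

    def __init__(self, hot_threshold: int = 3):
        self.hot_threshold = hot_threshold
        self.query_counts = Counter()
        self.hot_indexes = set()

    def route(self, index_name: str) -> str:
        """Record one query against index_name and return the tier that serves it."""
        self.query_counts[index_name] += 1
        if self.query_counts[index_name] >= self.hot_threshold:
            self.hot_indexes.add(index_name)
        return "hot" if index_name in self.hot_indexes else "cold"


if __name__ == "__main__":
    router = TierRouter(hot_threshold=3)
    for _ in range(4):
        print("product-search ->", router.route("product-search"))
    print("archive-2019 ->", router.route("archive-2019"))
```

A production policy would more likely count queries over a time window and demote indexes that go quiet; this version only shows the basic cost-versus-latency decision.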

The result?

A cost- and performance-optimized architecture: S3 Vectors answers queries with sub-second latency, while OpenSearch serves the vectors that need faster responses. That split matters for AI applications where some requests must feel near-instantaneous.

As demand grows, this tiered setup lets you scale vector storage and search while keeping storage costs low.

What S3 Vectors Doesn’t Do

S3 Vectors represents a major leap forward by bringing vector storage, indexing, and similarity search directly into S3—and its native integrations with Amazon Bedrock Knowledge Bases, SageMaker Unified Studio, and OpenSearch Service make building vectorized RAG pipelines far simpler.

For many applications, this eliminates the need for separate vector databases or complex indexing infrastructure.

However, at its core, S3 remains an object store designed for durability and scalability. While S3 Vectors is a powerful way to store large-scale vector data, it may not be optimal for every dataset or workload, especially the low-latency, iterative, or computation-intensive operations that modern AI applications demand.

This distinction matters when you move beyond basic storage and search into real-world, production workflows.

Key Gaps in S3 Vectors

Despite its capabilities, S3 Vectors still presents several limitations when it comes to AI workflows:

- Lack of Local-Like Interactivity: S3 Vectors operates through APIs designed primarily for batch or query-based operations. A vector bucket cannot be mounted as a local file system, so developers cannot work with embeddings the way they would with ordinary files. Each operation requires network calls plus serialization and deserialization, creating friction during development, iteration, and debugging.

- High Latency for Iterative Workloads: While S3 Vectors excels at large-scale similarity queries, it isn't optimized for low-latency, high-frequency access patterns. Tasks requiring rapid iteration (fine-tuning, vector transformations, or streaming updates) face performance bottlenecks due to repeated network round trips.

- Limited Multi-Cloud and Local Integration: S3 Vectors is tightly integrated with AWS services but presents challenges in hybrid or multi-cloud environments. Integration across different environments requires additional infrastructure to bridge APIs or replicate data across systems.

- Friction in Downstream Computation: When you need to perform local analytics, run batch transformations, or integrate with external compute environments, data must be extracted from S3 Vectors first. This data movement adds time, cost, and complexity to your workflow.
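The cost of repeated round trips in an iterative workload is easy to estimate. The sketch below multiplies the number of sequential reads by a per-read latency; the two latency figures and the read count are hypothetical placeholders rather than measurements of S3 Vectors or any other system.

```python
def total_access_time_s(num_reads: int, per_read_latency_ms: float) -> float:
    """Time spent waiting on sequential reads, in seconds."""
    return num_reads * per_read_latency_ms / 1000.0


# Hypothetical latencies, chosen only to show the order-of-magnitude effect.
REMOTE_API_LATENCY_MS = 100.0   # assumed network round trip per request
LOCAL_READ_LATENCY_MS = 0.1     # assumed read from a local or cached file

if __name__ == "__main__":
    reads = 50_000  # e.g. one read per step of an iterative job
    remote = total_access_time_s(reads, REMOTE_API_LATENCY_MS)
    local = total_access_time_s(reads, LOCAL_READ_LATENCY_MS)
    print(f"remote: {remote:.1f} s, local: {local:.1f} s")
```

Under these assumptions the same 50,000 reads take about 83 minutes over the network and about 5 seconds locally. Batching and parallel requests narrow the gap, but they also add the kind of complexity the list above describes.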

Archil: Transform S3 Data Access with Local-Like Performance for AI Workflows

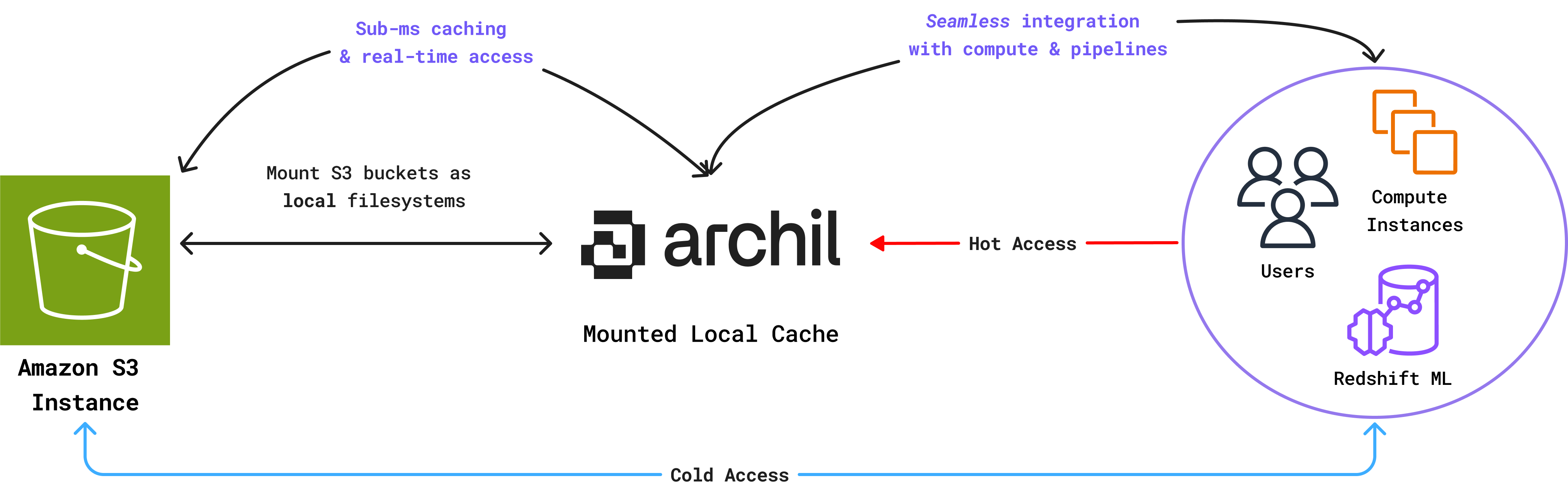

While Amazon S3 Vectors represents a significant advancement in scalable vector-native storage, it addresses only part of the AI data workflow challenge. Archil fills the critical gaps in developer interactivity, real-time prototyping, and cross-cloud portability.

With Archil, the contents of your Amazon S3 buckets appear as local files, providing immediate, low-friction data access from any compute environment. This removes the usual friction points of working with cloud storage.

Archil's file-based interface simplifies how you interact with S3-backed data across various scenarios, including:

- Stateful AI Agents: Enable AI agents to maintain state and work with large datasets through fast, shared access to training data and model artifacts.

- RAG with Unstructured Data: Build retrieval-augmented generation systems that efficiently process and retrieve from large collections of PDFs, images, and audio files.

- Financial Services: Process market data with POSIX-compliant storage that maintains an audit trail while providing high-performance access.

Whether you're conducting local experiments, streaming data to GPU workflows, or building hybrid pipelines, no specialized SDKs are required. You interact with your data just as you would with a local file system.
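To illustrate what working without a specialized SDK looks like, the sketch below writes and reads embeddings with nothing but Python's standard file APIs. Everything specific here is an assumption: the file layout (a small header followed by packed float32 values) is invented for this example and is not an Archil or S3 Vectors format, and a temporary directory stands in for a mounted bucket path.

```python
import struct
import tempfile
from array import array
from pathlib import Path

# Hypothetical layout: (vector count, dimension) header, then packed float32 rows.
HEADER = struct.Struct("=II")
FLOAT_SIZE = array("f").itemsize


def write_embeddings(path: Path, vectors: list[list[float]]) -> None:
    """Write equal-length vectors to a flat binary file (native byte order)."""
    dimension = len(vectors[0])
    flat = array("f")
    for vector in vectors:
        if len(vector) != dimension:
            raise ValueError("all vectors must have the same dimension")
        flat.extend(vector)
    with path.open("wb") as f:
        f.write(HEADER.pack(len(vectors), dimension))
        flat.tofile(f)


def read_embedding(path: Path, row: int) -> list[float]:
    """Seek straight to one vector instead of loading the whole file."""
    with path.open("rb") as f:
        count, dimension = HEADER.unpack(f.read(HEADER.size))
        if not 0 <= row < count:
            raise IndexError(f"row {row} out of range (0..{count - 1})")
        f.seek(HEADER.size + row * dimension * FLOAT_SIZE)
        values = array("f")
        values.fromfile(f, dimension)
    return values.tolist()


if __name__ == "__main__":
    # A temporary directory stands in for a mount point backed by a bucket.
    with tempfile.TemporaryDirectory() as mount_point:
        path = Path(mount_point) / "embeddings.bin"
        write_embeddings(path, [[1.0, 0.5], [0.25, -2.0]])
        print(read_embedding(path, 1))
```

Because the code only relies on `open`, `seek`, and `read`, the same functions would work unchanged against any path that behaves like a POSIX file system, which is the property the paragraph above describes.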

This local-like access to cloud object storage represents a crucial advancement for modern AI workflows. Though Archil and Amazon S3 Vectors aren't currently integrated, we're actively developing this connection.

The combination of Amazon's robust vector indexing capabilities with Archil's real-time access layer would significantly enhance AI pipelines across different clouds and compute environments.

Stay tuned for updates on this integration. The data world is about to get a whole lot smarter, faster, and more intuitive.
